The International Tinnitus Journal

Official Journal of the Neurootological and Equilibriometric Society

Official Journal of the Brazil Federal District Otorhinolaryngologist Society

ISSN: 0946-5448


Volume 24, Issue 1 / June 2020

Research Article, Pages: 7-14

Tinnitus: An Abstract View Emphasizing Signal, Noise, and Their Discrimination

Authors: Uwe Saint-Mont


Abstract

Most research on the hearing process and its pathologies is biological in nature. However, engineers work on similar problems, in particular signal detection, efficient information processing and noise suppression. On a more abstract level, hearing can also be treated as a discrimination task, a problem well known in statistics. The goal of this note is to explore the implications of these more abstract ideas. It emerges that the signal-to-noise ratio and the construction of a suitable discrimination function are crucial elements of the hearing process. Hopefully, such a theoretical point of view may facilitate a deeper understanding of the mechanisms involved. Perception fallacies such as phantom sounds or optical illusions are not just interesting in their own right; they open up a direct path to the deeper workings of perceptual processes. In order to be accessible to researchers in the field, this note is rather non-technical.

Keywords: Signal-to-Noise Ratio, discrimination task, neuronal plasticity, noise damage, bias

Introduction

Signal versus Noise

At first glance, statistical analysis of a set of data and the efficient processing of sensory input do not have much in common. However, there is a fundamental similarity: in both cases, a major part of the challenge consists in finding meaningful signals in a sea of noise.

For instance, every second our ears are exposed to all kinds of auditory input. An immense number of frequencies, sounds, voices, etc., are typically interwoven in a complicated way. Thus a basic task of any hearing system is to filter, i.e., to analyse this diverse input and to find the information hidden within it. One could also say that the basic problem of any sensory system is to distinguish between signal and noise, i.e., to draw a meaningful line between relevant information and ‘the rest’.

Since the visual world is complex but well ordered (shape, color, motion, etc.), this may be a rather straightforward task for the eyes and the occipital cortex, where visual input is mainly analyzed. With auditory input, however, the situation is quite different. Of course, there are basic parameters in this case too (frequency and intensity, in particular), yet many sources may produce sound at a given time: our body, voices (our own and those of others), and natural and artificial objects, from animals, the elements and musical instruments to machines, traffic and weapons. In other words, the typical input is a mess, and it is a formidable task to isolate useful information in this muddle of potentially interesting input.

Statistics, too, is concerned with information. More specifically, its basic problem very often consists in finding relevant chunks of information in a mass of raw data. We live in a noisy world, and thus a basic task consists in developing robust ‘de-noising’ procedures, i.e., devising algorithms that are able to detect the important part(s) of the input in a dynamic environment.

Since the 1930s, the field of communicating and storing information has surged, and engineers and computer scientists have been concerned with the “extraction of signals from noise” [1]. To this end, they defined the signal-to-noise ratio (S/N or SNR), which is the ratio of signal power to noise power. They also constructed various classes of filter, most notably the matched filter, linear continuous-time filters, and the Kalman filter [2]. Each of these filters (note the name) is endowed with a specific perspective, i.e., it extracts a particular kind of signal. Technically speaking, such a filter maximizes the S/N ratio at its output. This is tantamount to minimizing the background noise N, thus identifying the information in the data [3-5].
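As a minimal illustration of this definition, the following Python sketch computes the SNR as the ratio of mean signal power to mean noise power. It also expresses the ratio in decibels (10·log10). The function names and the two toy waveforms are hypothetical. The "noise" here is just a small alternating sequence standing in for real noise.

```python
import math

def mean_power(samples):
    """Mean power of a sampled waveform: the average of the squared amplitudes."""
    return sum(x * x for x in samples) / len(samples)

def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels: 10 * log10(P_signal / P_noise)."""
    return 10.0 * math.log10(mean_power(signal) / mean_power(noise))

# Hypothetical waveforms: a signal of amplitude 1.0 and a stand-in noise of amplitude 0.1.
signal = [1.0, -1.0] * 50
noise = [0.1, -0.1] * 50

print(f"power ratio: {mean_power(signal) / mean_power(noise):.1f}")
print(f"SNR: {snr_db(signal, noise):.1f} dB")
```

Because power scales with the square of amplitude, a tenfold amplitude ratio corresponds to a hundredfold power ratio, i.e., 20 dB.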

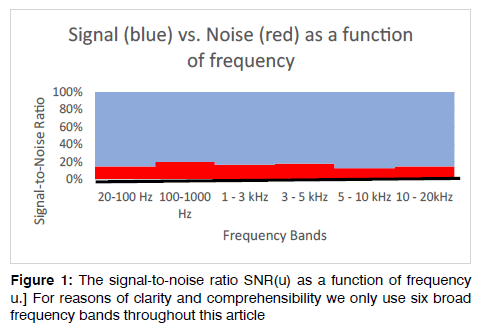

Apart from intensity, the most important ‘order parameter’ of sound is, of course, frequency [6,7]. Therefore, the core of many (if not most) filters is a so-called transfer function y = t(u). It describes how the filter's output y depends on its input as a function of the frequency u. If the system works properly, one should get the following result [8-10] (Figure 1).
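The general idea of a frequency-dependent transfer function can be illustrated with a textbook example. This example is our assumption, not the author's model of the ear. A first-order low-pass filter has the gain |H(f)| = 1/sqrt(1 + (f/f_c)²), so it passes low frequencies almost unchanged and attenuates high ones. The function name and the cutoff value f_c are hypothetical.

```python
import math

def lowpass_gain(f_hz, cutoff_hz):
    """Magnitude |H(f)| of a first-order low-pass filter: 1 / sqrt(1 + (f / f_c)^2)."""
    return 1.0 / math.sqrt(1.0 + (f_hz / cutoff_hz) ** 2)

cutoff = 1000.0  # hypothetical cutoff frequency f_c in Hz
for f in (100.0, 1000.0, 10000.0):
    gain = lowpass_gain(f, cutoff)
    print(f"{f:>7.0f} Hz: output/input amplitude = {gain:.3f}")
```

At the cutoff frequency the amplitude falls to 1/√2 (about -3 dB). A decade above it, the amplitude falls to roughly one tenth.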

Hearing: A Discrimination Task

A slightly different, but also more precise, view is that the basic task consists in decomposing the input into relevant signals and irrelevant noise. That is, one has to separate ‘real’ information from disturbing ‘random’ fluctuations that carry no information. Hence the black line in Figure 1 indicates that the separation of signal and noise is (almost) perfect [11].

Much more generally, the classical ansatz of error theory is the decomposition “observation = truth + error” [3], p. 190. The upshot of modelling consists in finding a structure that is, in a sense, ‘close’ to the data, i.e., a “model that fits” that set of data, in particular in the sense that “data = fit + residual” [4], p. 595. Nowadays, computer scientists and statisticians often just state that

Data = Signal (S) + Noise (N)
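The following is a minimal sketch of the decomposition "data = fit + residual". It assumes a slowly varying signal, and a centred moving average serves as the fit, i.e., the estimated signal. The remainder is the residual, i.e., the estimated noise. The window width, the simulated sine wave and the noise level are arbitrary choices for illustration.

```python
import math
import random

def moving_average(data, half_width):
    """Centred moving average; the window is truncated at the edges."""
    fit = []
    for i in range(len(data)):
        lo = max(0, i - half_width)
        hi = min(len(data), i + half_width + 1)
        window = data[lo:hi]
        fit.append(sum(window) / len(window))
    return fit

random.seed(1)  # reproducible illustration
n = 200
true_signal = [math.sin(2 * math.pi * i / n) for i in range(n)]
noise = [random.gauss(0.0, 0.3) for _ in range(n)]
data = [s + e for s, e in zip(true_signal, noise)]  # data = signal + noise

fit = moving_average(data, half_width=10)            # estimated signal
residual = [d - f for d, f in zip(data, fit)]        # estimated noise
```

By construction, data = fit + residual holds exactly for every sample. Whether the fit is close to the true signal depends on choosing a smoother that matches the kind of signal expected.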

In other words, given this perspective, the main task consists in distinguishing these two components, i.e., in discriminating between signal and noise. Given the input (reality) and the output (personal perception), one straightforwardly arrives at the following table (Table 1).

| Reality (rows) / Personal perception (columns) | Signal | No signal |
|---|---|---|
| Signal | correct | Error of the second kind (hearing loss) |
| No signal | Error of the first kind (tinnitus) | correct |

Table 1: Errors of both kinds.

Firstly, we do not make a mistake if the filter provides us with relevant information, i.e., if we detect a signal that really exists. Secondly, we are right if we do not perceive noise. That is, the filter(s) used by our auditory system is (are) able to detect and suppress irrelevant noises [14].

However, there are two basic kinds of error. On the one hand, we may not be able to perceive a signal that exists. Since the signal is not transferred to conscious perception, this deficit is consistently denoted “hearing loss”. In statistical jargon, it is an error of the second kind. On the other hand, we may hear some signal that has no equivalent in reality, i.e., a “phantom sound”. This is the definition of tinnitus, and an error of the first kind in statistical terms.
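Formally, Table 1 is a 2×2 confusion matrix. The sketch below maps each (reality, perception) pair onto one of its four cells and tallies them. The function name and the trial data are invented for illustration.

```python
from collections import Counter

def classify(reality: bool, perceived: bool) -> str:
    """Map one trial (signal present?, signal perceived?) onto a cell of Table 1."""
    if reality and perceived:
        return "correct (signal heard)"
    if not reality and not perceived:
        return "correct (nothing heard)"
    if reality and not perceived:
        return "error of the second kind (hearing loss)"
    return "error of the first kind (tinnitus)"

# Hypothetical trials: (signal present?, signal perceived?)
trials = [(True, True), (True, False), (False, False),
          (False, True), (True, True), (False, False)]
counts = Counter(classify(r, p) for r, p in trials)
for cell, n in sorted(counts.items()):
    print(f"{cell}: {n}")
```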

Making mistakes

The “hearing task” becomes more difficult if

• SNR(u) is small for certain frequencies or frequency bands, or

• SNR(u) is not smooth.

Quite obviously, the higher the signal-to-noise ratio, i.e., the more information there is relative to irrelevant noise, the easier the auditory task of distinguishing signal from noise. Conversely, if the channel transmitting information is error-prone, telling signal from noise becomes exceedingly difficult. It is easy to converse with somebody in a silent room, but not on a cell phone in the streets of a busy city, i.e., in a noisy environment.
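We can check that both kinds of error become more frequent as SNR falls. To do so, we assume a textbook signal-detection model, not the author's own formalism. On each trial the observation is either standard Gaussian noise (σ = 1) or a constant signal of amplitude a plus that noise. The listener reports "signal" whenever the observation exceeds the midpoint threshold a/2. The function names are hypothetical.

```python
import math

def gaussian_tail(x):
    """Q(x) = P(Z > x) for a standard normal variable Z."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def error_rates(amplitude, threshold=None):
    """Return (P(error of the first kind), P(error of the second kind)).

    Noise is standard normal (sigma = 1); the signal adds a constant `amplitude`.
    First kind: noise alone exceeds the threshold (a phantom 'signal').
    Second kind: signal plus noise stays below the threshold (a missed signal).
    """
    if threshold is None:
        threshold = amplitude / 2.0  # midway between 'no signal' and 'signal'
    p_first = gaussian_tail(threshold)
    p_second = 1.0 - gaussian_tail(threshold - amplitude)
    return p_first, p_second

for a in (4.0, 2.0, 1.0, 0.0):
    first, second = error_rates(a)
    print(f"amplitude {a}: first kind {first:.3f}, second kind {second:.3f}")
```

At amplitude 0, i.e., S/N = 0, this fixed-criterion detector does not fall silent. It is wrong half of the time in each direction.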

For the second case, note that the hearing system has to synthesize a sound (in the sense of coherent) overall aural impression. To this end, based on SNR(u), it has to extract and integrate the information in the data. For instance, given Figure 1, a rather elementary discrimination function suffices (see the black line in that diagram, which is a constant function). However, if SNR(u) is not smooth, frequency bands must be treated differently. This is much more difficult, and thus more error-prone, than processing all the input in a homogeneous way. In particular, it is not easy to cope with sudden changes or discontinuities of SNR(u).

In a lifetime perspective, our sensory capacities first grow (we learn to hear and listen) until they reach an optimum. Later, our sensory systems deteriorate, making mistakes more probable. Deafness and phantom noises are two basic kinds of error, and aging is a continuous process. Hence these symptoms should be rather common and become more frequent with age. Indeed, epidemiologically, hearing disorders increase with age and are very common. More than one third of the population experience tinnitus-like symptoms (overall prevalence is about 10% [5]), and many elderly people require an acoustic instrument such as a hearing aid. Moreover, wrong or missing personal perceptions are closely linked to physiological decay: about 90% of those with tinnitus suffer from hearing loss too [6].

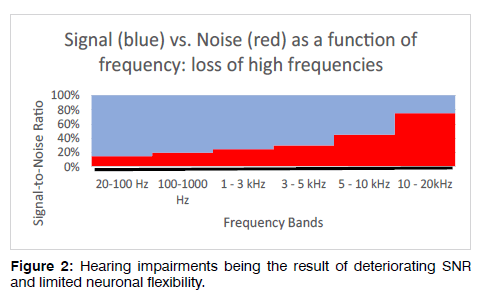

Frequency is proportional to energy, and the cochlear cells that process high-frequency sounds lie at its base, where sound enters via the oval window (i.e., all sound waves pass them). It therefore seems to be no coincidence that these cells are the most vulnerable and that, typically, we lose our ability to detect high frequencies first. If, in addition, the flexibility of the discrimination function is limited, one gets the following result (Figure 2).

In the illustration, the signal-to-noise ratio decreases with frequency. Thus the discrimination function should increase. Modelling a rather fixed or ‘settled’ hearing process, a linear function with a small slope seems reasonable. The blue area below the function signifies hearing problems, whereas the red area above the discrimination function corresponds to high-pitched phantom sounds. That is, the very difficult discrimination task in the highest frequency band leads to a systematic bias there.

Thus the diagram elegantly explains why high-frequency tinnitus is the most common form. This is also reflected in the etymology of ‘tinnitus’ (Latin: tinnīre, English: to tinkle; tin, making a rattling noise, also seems to be related) [7]. It also explains why, given that a good part of hearing consists of a discrimination task, deafness and tinnitus are intimately related. Within a certain range of flexibility, one cannot approximate a complicated ‘signal-to-noise landscape’ without error.

Note that it takes a long time for deafness/tinnitus to become noticeable or a nuisance: Problems start when SNR decreases (i.e., the red area becomes larger). If this happens slowly, there is plenty of time for adaptive measures, in particular, a deficit-specific reorganization of the brain (i.e., formally, the discrimination function changes its shape). However, with decaying “hardware” and neuronal networks reaching the limit of their plasticity, deafness and phantom sounds become more prevalent.

The auditory pathway

Quite obviously, the hearing process spans from the ear to the brain, with frequency and volume being key parameters of sound. Consistently, the analysis of sounds starts with the decomposition of acoustic input into distinct frequencies [8]. To this end, the cochlea is composed of frequency-sensitive segments that are able to measure sound intensity per (small) frequency band. Therefore, it seems to be a good approximation to interpret the red and blue areas in the diagrams as the output of the peripheral analysis. The ratio blue/red then represents the overall quality of this output.
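The picture of the cochlea as a frequency analyzer [8] can be mimicked, very loosely, by a discrete Fourier transform that reports the power per frequency band. The sketch below uses a naive DFT from the standard library to stay self-contained; a real application would use an FFT library. The sampling rate, the two test tones and the band edges are hypothetical. The DFT is a signal-processing analogy, not a model of cochlear mechanics.

```python
import cmath
import math

def one_sided_power(samples):
    """Power per frequency bin k = 0..N/2 from a naive DFT (N assumed even).

    Bins other than 0 and N/2 are doubled to account for negative frequencies,
    so the bin powers add up to the mean power of the signal (Parseval).
    """
    n = len(samples)
    powers = []
    for k in range(n // 2 + 1):
        x_k = sum(s * cmath.exp(-2j * math.pi * k * t / n) for t, s in enumerate(samples))
        p = abs(x_k) ** 2 / n ** 2
        powers.append(p if k in (0, n // 2) else 2 * p)
    return powers

def band_power(samples, rate_hz, bands_hz):
    """Sum the bin powers whose frequency lies in each [low, high) band."""
    n = len(samples)
    powers = one_sided_power(samples)
    return [sum(p for k, p in enumerate(powers) if low <= k * rate_hz / n < high)
            for low, high in bands_hz]

rate = 8000   # samples per second (hypothetical)
n = 400       # 50 ms of input; bins are rate / n = 20 Hz apart
sound = [math.sin(2 * math.pi * 500 * t / rate)            # loud 500 Hz tone
         + 0.2 * math.sin(2 * math.pi * 3000 * t / rate)   # faint 3 kHz tone
         for t in range(n)]
bands = [(0, 1000), (1000, 2000), (2000, 4001)]
for (low, high), p in zip(bands, band_power(sound, rate, bands)):
    print(f"{low}-{high} Hz: mean power {p:.4f}")
```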

Further analysis and synthesis take place in the primary auditory cortex and associated areas. In particular, fitting an optimum discrimination function requires extensive computational resources that are only available in the brain. Thus the black line in each of the diagrams would be a major result of the subsequent central data analysis. For instance, speech recognition is the task of Wernicke’s area in the left hemisphere. Since sensory nerves cross over to the other side, language is better understood with the right ear, and tinnitus is more common on the left [9].

Since deafness and/or tinnitus are consequences of the malfunctioning of the auditory pathway, any pathological change in the peripheral and/or the central hearing system may result in hearing impairment. Important ‘central’ examples are neurological diseases (e.g., meningitis), cardiovascular conditions (e.g., hypertension) and endocrine disorders (e.g., diabetes mellitus), but also ototoxic medication and stress. These quite diverse factors may have in common that they all reduce the brain’s ability to process auditory information [10]. This makes it more likely that the computational burden exceeds the brain’s capacity. Stressed beyond its limits, the hearing process may falter or break down, resulting in severe handicaps, e.g., sudden deafness or intense phantom sounds.

Looking at the link between peripheral and central information processing, it is surely no coincidence that “sensory experience and auditory cortex plasticity are intimately related” [11]. The link is so close that visual deprivation alters responses in the auditory cortex [12], and sound deprivation may lead to permanent hearing loss [13]. Moreover, there is evidence that at least some subtypes of tinnitus start with a surplus activity of auditory cells in the inner ear that extends to the thalamus and the cortex [14-16].

With deteriorating SNR, and thus an increasingly difficult discrimination task, misclassification becomes more probable, and errors of both kinds must increase. In the extreme, S/N = 0. Naively, due to a lack of signal, one would expect complete deafness. However, Table 1 suggests otherwise, and the empirical evidence agrees: typically, cochlear nerve section does not eliminate but rather promotes tinnitus [17-19]. This result also demonstrates that tinnitus is not necessarily peripheral. If it were, it could be removed like a necrotic limb. However, the opposite seems to be true: “In the past decade, animal model studies have indicated that most cases of chronic tinnitus… develop… when the brain loses its input from the ear” [19]. Norena distinguishes between ‘peripheral-dependent’ and ‘peripheral-independent’ central tinnitus [18-20].

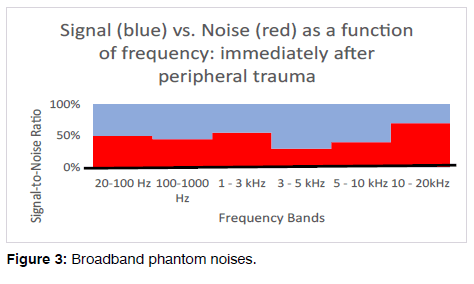

Trauma

What if SNR decreases within a short period of time? Standard examples are ‘peripheral’ infections or acoustic trauma damaging the sensory cells in the ear. Since valuable input is missing, this may result in acute hearing loss. Due to the immediate damage, an external stimulus has to be considerably stronger than before in order to produce the same subjective impression. However, since most of the auditory process has not changed, at least part of what was formerly ‘signal’ and is now ‘noise’ will likely still be interpreted as meaningful information. The same distinction as before is applied (see the black line in Figure 3, which equals the function in Figure 1). This inevitably turns meaningless noise into bewildering signal (the red areas above the black line), i.e., an error of the first kind occurs (Figure 3).

Since the ear distinguishes between frequency bands, rather broad damage should result in vague shifting sounds or broadband rustling. Yet narrow damage, affecting only a few frequencies (e.g., due to a cochlear dead region) should result in a definite phantom sound, such as buzzing or hissing.

After trauma, healing processes set in. In the best case, they eliminate anatomical defects, and we revert to the physiological situation. However, components may have been damaged beyond repair, and some frequency bands may have been ‘hit’ harder than others. In particular, for the reasons given above, cells processing high frequencies seem to be more vulnerable. Thus it is no contradiction that broadband impact noise can result in a high-pitched hearing deficit.

In any case, the following strategies are reasonable:

• Elevating SNR(u): Increased neuronal activity indicates that the damaged system tries to amplify the remaining signal [14,21-23].

• Adjusting the discrimination function: After an external shock, neural networks need to adapt to the new situation, and they seem to reorganize quickly [15,16,24,25].
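The trade-off hidden in the first strategy can be illustrated with a simple Gaussian detector. The detector is our assumption, not a physiological model. Suppose a damaged system multiplies its input by a gain g but keeps its detection threshold fixed. Then a faint signal is recovered, yet spontaneous noise also crosses the threshold more often, so errors of the first kind rise. The threshold, signal level and function name are hypothetical.

```python
import math

def exceed_probability(mean, gain, threshold, noise_sd=1.0):
    """P(gain * (mean + noise) > threshold) for zero-mean Gaussian noise and gain > 0."""
    z = (threshold / gain - mean) / noise_sd
    return 0.5 * math.erfc(z / math.sqrt(2.0))

threshold = 3.0     # fixed detection criterion (hypothetical units)
weak_signal = 1.0   # residual signal after damage (hypothetical)
for gain in (1.0, 2.0, 3.0):
    heard = exceed_probability(weak_signal, gain, threshold)
    phantom = exceed_probability(0.0, gain, threshold)
    print(f"gain {gain}: weak signal detected {heard:.3f}, "
          f"phantom detections in silence {phantom:.3f}")
```

In this toy setting, raising the gain alone cannot improve detection without also producing more phantom detections. Shifting the threshold at the same time corresponds to the second strategy.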

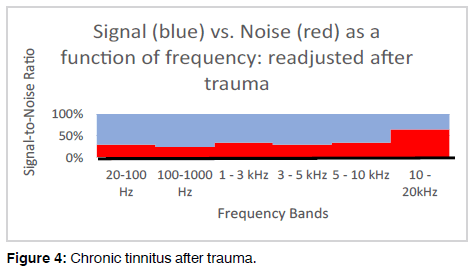

If these adaptive measures succeed to some extent, the following result should be quite typical (Figure 4).

In a nutshell, larger blue areas indicate that SNR has increased. However, considerable damage remains in the highest frequency band, leading to a discrimination task that cannot be solved without error. Apart from this exception, the fit of the discrimination function has become considerably better. Thus it seems to be correct that “…most investigators conceptualize [tinnitus] as the result of the auditory pathway employing compensatory adjustments that increase neural gain and promote central disinhibition in response to a hearing disorder” [26].

However, the popular term “maladaptive plasticity” [19] may be misleading. Owing to incurable lesions and limited neuronal plasticity, some degree of deafness and/or chronic tinnitus can be inevitable (e.g., if SNR is too low). Even if all measures succeed, hearing remains a difficult discrimination task that can only be solved with a certain amount of resources. If the system does not have these resources, a perfect solution is no longer feasible, merely an approximation with some degree of error. Partial recovery implies imperfect hearing capacity.

Of course, maladaptation aggravates the final condition and might be quite common, since it is indeed difficult to readjust a distorted system. On the one hand, a lack of adaptation leaves a considerable hearing disorder. On the other hand, exaggerated adaptation results in ‘order from noise’, i.e., persistent hyperacusis and phantom sounds, which are also highly correlated [17]. For instance, irrelevant input, e.g., minor muscular strains or mental tensions, may be noticed.

Hyperacusis demonstrates convincingly that the hearing system’s capacity to deal with input in an appropriate way has shrunk. Faint sounds must be boosted, yet stronger sounds are perceived as far too loud, leading to a narrow(er) range of acceptable input. Such an effect looks as if a badly adjusted, error-prone system were responsible. Yet hyperacusis may also occur in an optimally readjusted system, because the post-traumatic state of affairs allows only for a small ‘tolerance interval’.

In short, neural networks are rather robust. Still, systematic biases are hard to avoid if the sensory system (the inner ear, in particular) has lost many of its resources, resulting in permanent hearing loss and/or tinnitus. Adaptive measures, in particular those leading to reduced sensitivity and/or hypersensitivity, may also easily generate systematic errors. Thus chronically affected auditory perceptions are the rule rather than the exception. From an engineer’s perspective, a systematic bias becomes more probable under three conditions. These are a more sudden damage (leaving no time for adaptation), a smaller remaining overall signal-to-noise ratio, and a more complex resulting function SNR(u).

With modern diagnostic tools, it is rather straightforward to detect a major ‘hardware fault’. However, minor ‘software damage’ is difficult to uncover. To find a hidden hearing loss, one therefore needs to consider the entire auditory pathway and watch out for rather subtle defects. Since about 10% of all tinnitus patients have a normal audiogram [22], there still seem to be tinnitus subtypes that standard diagnostic tools miss [27,28].

Therapeutic strategies

Since SNR seems to lie at the heart of many hearing disorders, an obvious strategy is to improve the signal-to-noise ratio. The more information reaches the brain, the better. Consequently, physical damage should be treated as quickly as possible. Patients should also avoid all kinds of strain, especially (though perhaps surprisingly) silence, i.e., situations with hardly any signal.

Next, it is easy to amplify potential signals with the help of hearing aids. In extreme cases, cochlear implants can restore the signal transmission path if the inner ear has been destroyed beyond repair [17,19].

In the case of a one-sided disorder, it also seems advisable to collect acoustic information on the impaired side and transmit it to the healthy side, using the contralateral pathway [29]. Technically, a straightforward solution consists of a pair of communicating hearing aids. A microphone on the impaired side transmits information to a speaker on the other side, possibly adjusting for the lateral difference.

Instead of merely amplifying the signal, one may also try to reduce noise. In order to eliminate external background noise, headphones use active noise control (anti-noise). Of course, internal buzzing or hissing cannot just be switched off, but masking devices that produce additional input help to diminish disturbing phantom sounds.
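Active noise control rests on destructive interference: adding a copy of the noise with inverted sign (a 180° phase shift) cancels it. The idealized sketch below assumes that the noise waveform is known exactly. It then shows how a small timing error leaves part of the noise uncancelled. The 100 Hz hum, sampling rate and delay are hypothetical, and real headphones can only approximate this behaviour.

```python
import math

rate = 8000  # samples per second (hypothetical)
hum = [0.3 * math.sin(2 * math.pi * 100 * t / rate) for t in range(rate // 10)]  # 0.1 s of 100 Hz hum

# Ideal anti-noise: the same waveform with inverted sign (180 degree phase shift).
anti_noise = [-x for x in hum]
residual = [h + a for h, a in zip(hum, anti_noise)]

# A timing error of a few samples leaves part of the hum uncancelled.
delay = 4
late_anti_noise = [0.0] * delay + anti_noise[:-delay]
late_residual = [h + a for h, a in zip(hum, late_anti_noise)]

print(f"peak residual, perfect timing: {max(abs(x) for x in residual):.4f}")
print(f"peak residual, {delay}-sample delay: {max(abs(x) for x in late_residual):.4f}")
```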

Rather counterintuitively, the signal-to-noise ratio can also be increased by adding white noise, i.e., a random mixture of many frequencies, all having about the same intensity. This phenomenon is known as stochastic resonance. In a nonlinear system with a detection threshold, a moderate amount of added noise can push a weak, sub-threshold signal over that threshold, so that the original signal becomes more prominent in the output. Consistently, Christensen et al. have recently been able to show that “background white noise increases the discriminability of spectrally similar tones” [30].
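Stochastic resonance is easiest to see in a threshold detector, which is the setting assumed in this sketch. A weak sine wave of amplitude 0.5 never reaches a threshold of 1.0 on its own, so without noise the detector stays silent. The code computes, analytically rather than by simulation, how much more often the detector fires during the positive half-cycle of the signal than during the negative one. This "tracking score" is a hypothetical measure chosen for simplicity, not a standard SNR definition. The amplitude, threshold and function names are also illustrative.

```python
import math

def firing_probability(value, threshold, noise_sd):
    """P(value + noise > threshold) for Gaussian noise; deterministic if noise_sd == 0."""
    if noise_sd == 0.0:
        return 1.0 if value > threshold else 0.0
    return 0.5 * math.erfc((threshold - value) / (noise_sd * math.sqrt(2.0)))

def tracking_score(noise_sd, amplitude=0.5, threshold=1.0, steps=1000):
    """Mean firing probability in the positive half-cycle minus that in the negative one."""
    pos, neg = [], []
    for i in range(steps):
        s = amplitude * math.sin(2 * math.pi * (i + 0.5) / steps)
        (pos if s > 0 else neg).append(firing_probability(s, threshold, noise_sd))
    return sum(pos) / len(pos) - sum(neg) / len(neg)

for sd in (0.0, 0.2, 0.5, 1.0, 2.0, 5.0):
    print(f"noise SD {sd}: tracking score {tracking_score(sd):.3f}")
```

The score is zero without noise. It peaks at a moderate noise level and falls again when noise dominates, which is the characteristic signature of stochastic resonance.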

Adapting this idea to specific disorders is a logical step. Depending on the kind of disorder, various types of colored (pink, blue, grey, red,…) noise could be suited better. If SNR(u) is ‘bumpy’, it may also be useful to adjust the level and the quality of external stimuli, in particular in the frequency bands with low SNR.

More generally speaking, any treatment, mechanism or device that helps the brain discriminate between information and meaningless noise could prove helpful. At the very least, a deliberately chosen acoustic environment seems much more likely to help the auditory system recover than silence or excessive noise. For example, Sturm et al. used acoustic enrichment, i.e., “moderate intensity, pulsed white noise”, to prevent auditory processing/perception deficits immediately after acoustic trauma [31].

Formally, suppose the physiological neuronal transfer function f(t) has been changed to a pathological g(f(t)). If g is invertible, one can in principle apply g⁻¹ to return to normal, since g⁻¹(g(f(t))) = f(t). Oculists are used to this principle, routinely prescribing lenses that compensate for a particular bias of the eye (for example, concave lenses remedy short-sightedness). Compare this to sound therapy. It “is the preferred mode of audiological tinnitus management in many countries” and was proposed long ago. Yet there is no consensus on the type of sound, its level, or the daily duration of use [32,33], pp. 412, 416-417.
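The lens analogy can be made concrete for the simplest case, a frequency-dependent attenuation. If g scales each frequency band by a known factor, then g⁻¹ scales it by the reciprocal, i.e., the opposite gain in decibels. The sketch assumes g is invertible, i.e., no band has been lost completely. The band list and the per-band losses are hypothetical.

```python
import math

def apply_gain_db(levels, gains_db):
    """Scale each band's amplitude by a gain given in decibels."""
    return [x * 10 ** (g / 20.0) for x, g in zip(levels, gains_db)]

bands_hz = [250, 1000, 4000, 8000]
original = [1.0, 1.0, 1.0, 1.0]            # physiological input f, one value per band
loss_db = [0.0, -5.0, -20.0, -35.0]        # pathological distortion g (hypothetical)
compensation_db = [-x for x in loss_db]    # inverse g^-1: the opposite gain per band

distorted = apply_gain_db(original, loss_db)
restored = apply_gain_db(distorted, compensation_db)
for f, d, r in zip(bands_hz, distorted, restored):
    print(f"{f} Hz: distorted {d:.3f}, restored {r:.3f}")
```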

If one follows the ophthalmologic idea, then a certain type of tinnitus should be treated with an appropriate ‘anti-sound’, for instance a fitting type of colored noise. To this end, it seems helpful to classify tinnitus more precisely. Possible criteria are tone frequency, volume, rate (how often, at what time of the day, and under what circumstances phantom sounds occur), quality (buzzing, rattling, hissing, etc.) and correlated deficits such as vertigo. ‘Precision medicine’ in the sense of defining small homogeneous subgroups and ‘deep phenotyping’ [26] could make a difference at the bedside: the more detailed the clinical picture(s), the more specific a therapy can be [18].
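One way to record the classification criteria listed above is a structured record per patient, which then allows patients to be grouped into small, homogeneous subgroups. The class name, field names, pitch-band limits and grouping key below are hypothetical illustrations, not an established clinical schema.

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class TinnitusProfile:
    """Hypothetical record of the features suggested for classifying tinnitus."""
    patient_id: str
    frequency_hz: float          # pitch match of the phantom sound
    loudness_db: float           # volume
    episodes_per_day: float      # rate of occurrence
    quality: str                 # e.g. "buzzing", "rattling", "hissing"
    correlated_deficits: list = field(default_factory=list)  # e.g. ["vertigo"]

    def frequency_band(self) -> str:
        """Coarse pitch category; the band limits are arbitrary choices."""
        if self.frequency_hz < 1000:
            return "low"
        if self.frequency_hz < 4000:
            return "mid"
        return "high"

def subgroups(profiles):
    """Group patient IDs by (pitch band, sound quality) as a crude form of phenotyping."""
    groups = defaultdict(list)
    for p in profiles:
        groups[(p.frequency_band(), p.quality)].append(p.patient_id)
    return dict(groups)

patients = [
    TinnitusProfile("A", 6000, 30, 24, "hissing"),
    TinnitusProfile("B", 250, 20, 3, "buzzing", ["vertigo"]),
    TinnitusProfile("C", 8000, 35, 24, "hissing"),
]
print(subgroups(patients))
```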

On the other hand, general strategies are needed. Sound and emotions are closely linked (just think of music), so deafness and phantom sounds are obviously a nuisance, in particular if they persist. In other words, at least in the longer run, psychosomatic effects are to be expected. First, attention is directed towards irrelevant noise or towards deciphering faint sounds. Second, cognition is disturbed: one cannot focus on relevant tasks, and thoughts may circle around the acoustic impressions. Third, a lack of silence or excessive silence triggers restlessness, negative emotions and fears, but also disturbs sleep patterns and social life. Finally, all these factors combined may provoke a race to the bottom (i.e., a self-reinforcing negative development). In the worst case, this leads to high levels of stress, depression, social isolation and, ultimately, inability to work.

To prevent all this from happening, it seems quite straightforward to first supplement a given tinnitus with a similar, but less virulent, kind of noise. Second, the competition between the pathological and the artificially introduced signal could slowly be shifted in favor of the latter. (Learn to listen to your friend and not to your foe.) Third, the implemented sound should be modified so that it substitutes for the incriminating noise and, in the best case, eliminates it completely.

Contemporary retraining and habituation therapies may be less specific, yet they proceed along similar lines [34]. Essentially, they also build on the brain’s plasticity to restructure the hearing process. To this end, patients are advised to get used to disturbing sounds and to distance themselves from them. Rather than dealing cognitively with the symptoms, they should ‘re-evaluate’ these sounds emotionally and direct attention away from them. Accepting and tolerating phantom sounds helps to ignore and forget them. It is much more difficult to take no notice of an unnerving scream than to ignore some latent ‘neutral’ background rustling [35].

In other words, emotions, cognition and attention are retrained so as to ‘compensate’ for an acoustic disorder. Following this train of thought, it could pay to include other senses, in particular ‘emotional odor’ [36,37]. It could also pay to ‘reprogram’ neural networks quite directly, i.e., to treat a specific hearing disorder with an appropriate mixture of sound(s) and noise(s). The optimum seems to be an integrated therapy that reverses potential maladaptation in the auditory pathway and minimizes psychological stress.

Conclusions

Hearing is a complex and only partly understood process. Traditionally, the focus has been on the ear and its vicinity. However, the ear is merely a (sophisticated!) measurement device, and the larger part of the analysis takes place in the brain. There is thus no escape from studying and modeling the entire process, leading from external noises to conscious sounds [38,39,40], p. 59. A basic factor is volume, which is directly proportional to energy. Therefore, it is no coincidence that loud noise is the major cause of damage to the hearing system (the same holds for ultraviolet light and the visual sense).
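Sound levels are quoted on a logarithmic scale. Hence modest differences in decibels correspond to large differences in acoustic energy: the intensity ratio for a level difference ΔL is 10^(ΔL/10). The sketch below simply evaluates this standard relation. The function name is hypothetical.

```python
def intensity_ratio(delta_db):
    """Ratio of sound intensities (energy per unit time and area) for a level difference in dB."""
    return 10 ** (delta_db / 10.0)

for delta in (3, 10, 20, 30):
    print(f"+{delta} dB -> {intensity_ratio(delta):.1f} times the intensity")
```

Roughly every 3 dB doubles the acoustic energy reaching the ear, and every 10 dB multiplies it by ten.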

In recent years, detailed studies have broadened and deepened our understanding. In particular, imaging technology has made it possible to uncover anatomical trauma but also pathological neural activity. It is therefore to be expected that ‘subjective’ tinnitus will become observable, and that objective markers will make diagnosis more precise. Deafness is a rather one-dimensional disease, whereas tinnitus has many facets; one might even claim that each patient experiences different symptoms. Nevertheless, deafness, phantom sounds and hyperacusis are a common syndrome, with each of the symptoms reflecting a certain deficit of a damaged hearing system.

Obviously, most of the research on the hearing process and its pathologies should be conducted in medical schools. However, a more abstract view, such as the one elaborated in this paper, may also be helpful. Ultimately, hearing is a particular form of information processing, with signal detection at its centre. Therefore disciplines like the information sciences, statistics, and engineering, which have studied signal and noise in detail, should also have their say.

In particular, statistics suggests that the discrimination of meaningful sounds and irrelevant noise is crucial. In addition, engineering knows many technical tricks that help detect signals in a noisy environment: frequency analysis, various kinds of amplifier and filter, feedback, lateral inhibition, noise reduction, signal averaging and signal fusion, etc. It would be very surprising if the hearing system optimized by evolution did not use at least some of these strategies.
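Signal averaging, one of the techniques listed above, can be sketched in a few lines. Suppose the same signal is observed repeatedly with independent noise. Averaging N repetitions then leaves the signal unchanged but reduces the noise standard deviation by a factor of about √N. The waveform, the noise level and the helper names are hypothetical.

```python
import math
import random
import statistics

random.seed(0)                  # reproducible illustration
length, noise_sd = 100, 1.0     # hypothetical waveform length and noise level
signal = [math.sin(2 * math.pi * t / length) for t in range(length)]

def noisy_trial():
    """One observation of the signal plus independent Gaussian noise."""
    return [s + random.gauss(0.0, noise_sd) for s in signal]

def averaged(n_trials):
    """Sample-by-sample mean over n_trials independent observations."""
    trials = [noisy_trial() for _ in range(n_trials)]
    return [sum(values) / n_trials for values in zip(*trials)]

def residual_sd(estimate):
    """Standard deviation of the remaining noise (estimate minus true signal)."""
    return statistics.pstdev([e - s for e, s in zip(estimate, signal)])

for n in (1, 16, 256):
    print(f"{n:>3} trials: residual SD {residual_sd(averaged(n)):.3f}, "
          f"expected about {noise_sd / math.sqrt(n):.3f}")
```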

On the one hand, a better understanding of nature’s efforts may enhance our technology. On the other, insights gained in the construction of sensors may help uncover relevant causal mechanisms and explain in vivo processes. Furthermore, the more abstract theories of the information sciences and acoustics (e.g., about neural networks, information processing, filtering, signal detection and de-noising) may, at least in the long run, give the best clues as to how to treat hearing disorders efficiently and effectively.

Acknowledgments

The author would like to thank Dr Bernadette Talartschik (Schön Klinik, Bad Arolsen) for her excellent support and Dr Martin Kinkel (KIND Hörstiftung, Hanover) for his valuable suggestions.

References

- Wainstein LA, Zubakov VD. Extraction of signals from noise. Englewood Cliffs, NJ: Prentice Hall. 1962.

- Simon D. Optimal state estimation: Kalman, H∞, and nonlinear approaches. Wiley. 2006.

- Stigler SM. Statistics on the Table. The History of Statistical Concepts and Methods. Harvard University Press, Cambridge, MA. 1999.

- Tukey JW. What have Statisticians been Forgetting? Chapter 14 (pp. 587-599) in Jones LV (editor). The collected works of J. W. Tukey, vol. IV: Philosophy and Principles of Data Analysis: 1965-1986. Chapman & Hall, London. 1986.

- Bhatt JM, Lin HW, Bhattacharyya N. Prevalence, Severity, Exposures, and Treatment Patterns of Tinnitus in the United States. JAMA Otolaryngol Head Neck Surg. 2016;142:959-65.

- Bahmad F Jr, Oliveira CACP, Holdefer L. Tinnitus and Hearing Loss. Chapter 1 (pp. 3-12) in Bahmad F Jr (editor). Up to Date on Tinnitus. IntechOpen.

- Aldersey-Williams H. Periodic Tales: The Curious Lives of the Elements. Viking. 2011.

- Gold T, Pumphrey RJ. The cochlea as a frequency analyzer. Proc R Soc Lond B. 1948;135:462-91.

- Sacchinelli D. Want to listen better? Lend a right ear. Presentation given at the 174th Meeting of the Acoustical Society of America (New Orleans, Louisiana). 2017.

- Langguth B, Kreuzer PM, Kleinjung T, De Ridder D. Tinnitus: causes and clinical management. Lancet Neurol. 2013;12:920-30.

- Dahmen JC, King AJ. Learning to hear: plasticity of auditory cortical processing. Current opinion in neurobiology. 2007;17:456-64.

- Solarana K, Liu J, Bowen Z, Lee HK, Kanold PO. Temporary visual deprivation causes decorrelation of spatio-temporal population responses in adult mouse auditory cortex. eNeuro. 2019. doi:10.1523/ENEURO.0269-19.2019

- Liberman MC, Liberman LD, Maison SF. Chronic Conductive Hearing Loss Leads to Cochlear Degeneration. PLoS ONE. 2015;10:e0142341.

- O'Keeffe MG, Thorne PR, Housley GD, Robson SC, Vlajkovic SM. Distribution of NTPDase5 and NTPDase6 and the regulation of P2Y receptor signalling in the rat cochlea. Purinergic Signal. 2010;6:249-61.

- Pilati N, Ison MJ, Barker M, Mulheran M, Large CH, Forsythe ID, et al. Mechanisms contributing to central excitability changes during hearing loss. Proc. Natl Acad Sci. 2012;109:8292-7.

- Zhuang X, Sun W, Xu-Friedman MA. Changes in properties of auditory nerve synapses following conductive hearing loss. J Neurosci. 2017;37:323-32.

- Baguley D, McFerran D, Hall D. Tinnitus. Lancet. 2013;382:1600-7.

- Norena AJ. Revisiting the cochlear and central mechanisms of tinnitus and therapeutic approaches. Audiol Neurootol. 2015;20:53-9.

- Shore SE, Roberts LE, Langguth B. Maladaptive plasticity in tinnitus-Triggers, mechanisms and treatment. Nat Rev Neurol. 2016;12:150-160.

- Norena AJ. An integrative model of tinnitus based on a central gain controlling neural sensitivity. Neurosci Biobehav Rev. 2011;35:1089-1109.

- Norena AJ, Eggermont JJ. Changes in spontaneous neural activity immediately after an acoustic trauma: implications for neural correlates of tinnitus. Hear Res. 2003;183:137-53.

- Schaette R, McAlpine D. Tinnitus with a Normal Audiogram: Physiological Evidence for Hidden Hearing Loss and Computational Model. The Journal of Neuroscience. 2011;31:13452-7.

- Roberts LE, Eggermont JJ, Caspary DM, Shore SE, Melcher JR, Kaltenbach JA. Ringing Ears: The Neuroscience of Tinnitus. Journal of Neuroscience. 2010;30:14972-9.

- Chen YC, Li X, Liu L, Wang J, Lu C, Yang M, et al. Tinnitus and hyperacusis involve hyperactivity and enhanced connectivity in auditory-limbic-arousal-cerebellar network. Elife. 2015;4:e06576.

- Koehler SD, Shore SE. Stimulus Timing-Dependent Plasticity in Dorsal Cochlear Nucleus Is Altered in Tinnitus. Journal of Neuroscience. 2013;33:19647-56.

- Szczepek AJ, Frejo L, Vona B, Trpchevska N, Cederroth CR, Aria H, et al. Recommendations on Collecting and Storing Samples for Genetic Studies in Hearing and Tinnitus Research. Ear and Hearing. 2018;40:219-26.

- Zheng Y, Guan J. Cochlear synaptopathy: a review of hidden hearing loss. J Otorhinolaryngol Disord Treat. 2018;1:1-11.

- Dadoo S, Sharma R, Sharma V. Oto-acoustic emissions and brainstem evoked response audiometry in patients of tinnitus with normal hearing. Int. Tinnitus Journal. 2019;23:17-25.

- Punte AK, Meeus O, Van de Heyning P. Cochlear implants and tinnitus. In: M

Department of Economics and Social Sciences, Nordhausen University of Applied Sciences, Germany

Institution: Dr. Franz Frosch, Private Initiative Brummton, Bad Dürkheim, Germany

Send correspondence to:

Uwe Saint-Mont

Department of Economics and Social Sciences, Nordhausen University of Applied Sciences, Germany, Email: saint-mont@hs-nordhausen.de

Phone: +49-3631-420-512

Paper submitted to the ITJ on December 20, 2019; and accepted on January 07, 2020.

Citation: Saint-Mont U. Tinnitus: An Abstract View Emphasizing Signal, Noise, and Their Discrimination. Int Tinnitus J. 2020;24(1):7-14.